Discovering a fascinating Computational Universe

With this post I am publishing some of my personal notes and reflections on Quantum Computing and the idea of a Computational Universe. Readers interested in this topic will find a good starting point in the book Programming the Universe by Seth Lloyd, professor of mechanical engineering at the Massachusetts Institute of Technology, and in the articles Ultimate physical limits to computation and The Universe as Quantum Computer by the same author. For more insights, please consult the footnotes as usual.

##Not only pulp and seeds in an apple

To start the discussion, let’s consider a simple apple. We know that it carries some kind of information related to its physical nature (e.g. skin color, size, taste, etc.). Some questions we might be interested in are how much information it contains, or how many bits are in the apple. There is nothing earth-shattering about these questions: an apple really does have a finite number of bits. In fact, the laws of Quantum Mechanics, which govern all physical systems, ensure that each physical system with finite energy, confined in a finite volume of space, has a finite number of distinct states and thus records a finite number of bits. So there is a finite number of bits that can be used to represent the microscopic state of an apple and its atoms.
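The link between "a finite number of distinct states" and "a finite number of bits" is just a base-2 logarithm. The snippet below is a minimal sketch of that counting argument; the function name `bits_to_label` and the toy state counts are illustrative assumptions, not figures from Lloyd's book.

```python
def bits_to_label(num_states: int) -> int:
    """Smallest number of bits that can label `num_states` distinct states."""
    if num_states < 1:
        raise ValueError("a system needs at least one state")
    # For integers, (n - 1).bit_length() equals ceil(log2(n)), and it avoids
    # floating-point rounding when the state count is very large.
    return (num_states - 1).bit_length()


# Toy examples (hypothetical state counts, chosen only for illustration).
coin = bits_to_label(2)     # a two-state system records one bit
die = bits_to_label(6)      # six states need three bits
byte = bits_to_label(256)   # 256 states fit exactly in eight bits
```

Read the other way round, a system that records n bits has at most 2**n distinguishable states, which is why a finite state count always implies a finite bit count.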

Now try to extend this concept to every atom, molecule, bacterium, living organism, star, planet and galaxy. Seth Lloyd’s thesis consists in this different view of every physical system and its dynamics. In particular, Lloyd states that the natural dynamics of each physical system can be thought of as a computation, in which a single bit not only stores a 0 or a 1, but also acts as an instruction:

- the Universe is the biggest thing there is, and a bit is the smallest piece of information;
- the Universe is made of bits, since each molecule, atom, or elementary particle records information;
- every interaction among these pieces of the Universe processes this information by altering these bits;
- the result is that the Universe itself computes, and since it is governed by the laws of Quantum Mechanics, it computes in a quantum way. The Universe is a quantum computer.

With this view of the Universe, the importance of every single bit depends not only on its individual value, but also on how it affects the other bits in the Universe. Information is physical, because all physical systems record information. All bits are equivalent in terms of the amount of information they can carry, but some bits are more important than others: different bits play different roles in the Universe, so the importance of the information varies from bit to bit. An important question is whether there is a mathematical way to quantify the importance of these bits.

If we accept the computational nature of the Universe, it is legitimate to ask what the Universe is computing. The answer is: itself, its own behavior. The quantum-computational nature of the Universe implies that the details of the future are inherently unpredictable. They can be calculated only by a computer as large as the Universe itself. Otherwise, the only way to find out is to wait for the future to happen.

##Quantum Computing

Quantum Mechanics is the branch of Physics that studies matter at very small scales. To make an analogy, Quantum Mechanics stands to atoms as classical mechanics stands to engines. Quantum Mechanics is notoriously strange: waves that behave like particles, particles that behave like waves, objects that can be in two different places at the same time. Niels Bohr, one of the fathers of the quantum revolution, said that Quantum Mechanics makes you dizzy. Take a look at the articles What is real and A Nebulous Dragon for a brief introduction to Quantum Mechanics and its implications for the interpretation of Nature.

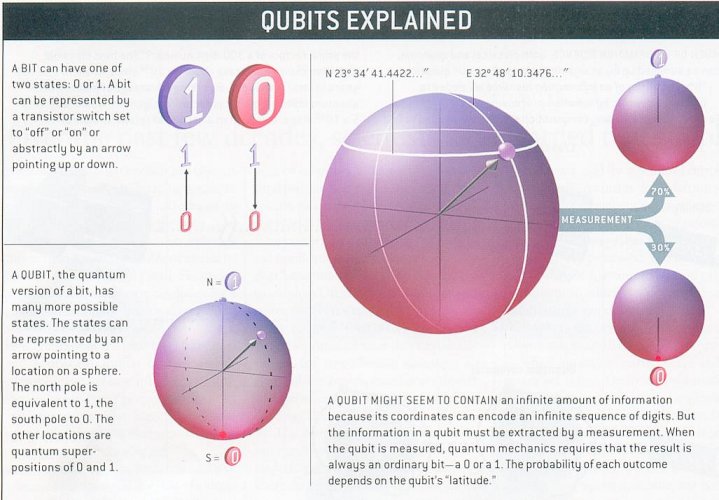

A quantum computer works using the laws of Quantum Mechanics. It is a device that harnesses the same information-processing abilities attributed to atoms, molecules or chemical elements. Quantum computers exploit the weirdness of Quantum Mechanics to perform tasks that are very hard for classical computers. Since a quantum bit, or qubit, can record 0 and 1 at the same time, while a bit in a classical computer can record only 0 or 1, a quantum computer can, in a sense, perform millions of operations simultaneously. For a quantum computer, the classical distinction between digital and analog computers does not make sense: it is both digital and analog at the same time.

######Qubits explained (Credit: https://universe-review.ca)
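The idea of a qubit recording 0 and 1 "at the same time" can be made a little more concrete with a toy classical simulation. The snippet below is only a sketch under simplifying assumptions: a single qubit is modeled as a pair of complex amplitudes, and the helper names `hadamard`, `probabilities` and `amplitudes_needed` are made up for this example. It is not how a real quantum computer is programmed.

```python
import math


def hadamard(state):
    """Apply the Hadamard gate to a single-qubit state (amp0, amp1)."""
    amp0, amp1 = state
    s = 1 / math.sqrt(2)
    return (s * (amp0 + amp1), s * (amp0 - amp1))


def probabilities(state):
    """Born rule: chance of reading 0 and chance of reading 1."""
    return tuple(abs(a) ** 2 for a in state)


def amplitudes_needed(num_qubits):
    """Complex numbers a classical simulation must track for n qubits."""
    return 2 ** num_qubits


ZERO = (1 + 0j, 0 + 0j)       # a qubit that definitely reads 0
plus = hadamard(ZERO)          # equal superposition of 0 and 1
p0, p1 = probabilities(plus)   # both are one half, up to rounding
```

In this picture the "millions of operations" are the amplitudes a classical machine would have to update one by one: `amplitudes_needed(20)` is already 1,048,576, and the count doubles with every additional qubit.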

A quantum computer is the democracy of information, since each element (atom, electron or photon) participates on an equal footing in the storage and processing of information. Historically, Paul Benioff, Richard Feynman and David Deutsch were the ones who started to talk about quantum computers in the early ’80s. At that time a quantum computer was a total abstraction: nobody had the slightest idea how to build one.

Nowadays, quantum technologies allow us to manipulate matter at the atomic scale, and quantum computers represent the ultimate level of miniaturization. But remember that atoms are not the only systems able to record and process information. Photons (particles of light), phonons (particles of sound), quantum dots (a sort of synthetic atom), superconducting circuits, and all other microscopic systems are able to record information. Someone able to speak the atoms’ language will also be able to instruct them to process this information. Physical systems, in fact, speak a language whose grammar is the laws of Physics.

##The role of information in the Universe

Answering a question like "how much" is generally easier than answering a question like "what is it". The ability of the Universe to process information has grown over time thanks to a series of information-processing revolutions: the creation of life, sexual reproduction, language, writing, printing and computing. Every atom, every single elementary particle records information. Each collision between them, each dynamic change in the Universe, no matter how small, processes this information.

The computational capacity of the Universe underlies all of these information-processing revolutions. In a quantum computer, every atom records one bit. In the Universe there are about 10^90 particles. Since 2^300 is larger than 10^90, it is possible to assign a unique 300-bit code to each particle.
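The 300-bit figure can be checked with a couple of lines of arithmetic. This is a minimal sketch that takes the particle count of 10 to the 90 quoted in the text as given; the variable names are illustrative.

```python
import math

PARTICLES = 10 ** 90  # rough particle count quoted in the text

# Floating-point estimate: log2(10**90) = 90 * log2(10)
approx_bits = 90 * math.log2(10)

# Exact integer answer: smallest whole number of bits giving every particle
# its own label, i.e. ceil(log2(PARTICLES)).
exact_bits = (PARTICLES - 1).bit_length()

print(round(approx_bits, 2))   # 298.97
print(exact_bits)              # 299
print(2 ** 300 >= PARTICLES)   # True: a 300-bit code has room to spare
```

So 299 bits are already enough, and a 300-bit code is a comfortable round number.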

For several centuries, philosophers have tried to understand what ‘meaning’ means, with modest results. The problem is that the meaning of a message depends heavily on how the information is interpreted. For computers, this ambiguity is a bug; for humans, it is a bonus. Although meaning is rather complicated to define, it is the most important characteristic of information.

What is the most general definition we can give of a computer? A machine for processing information seems to be the most common one. However, this is a fairly broad definition, since it implies that many things can be computers: DNA, our brain, the abacus, even our own fingers. Calculus is the Latin word for pebble; indeed, the first computers were rocks. Stonehenge may have been used to calculate calendars and planetary alignments. The invention of the abacus was a revolution: a computer made of wood that incorporates a fundamental mathematical abstraction, the number zero. The word zero comes from shunya, a Sanskrit word meaning empty.

One of the greatest achievements in the history of computation, due to the English mathematician George Boole, is the demonstration that any logical expression or computation can be encoded in a logic circuit. However, a rather counterintuitive consequence of the logic operations inside a computer is that the computer’s behavior is unpredictable. In other words, the only way to know whether a computation will produce an output once it starts is to wait for it to finish (if it ever does).
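The claim that logical expressions can be encoded in circuits is easy to illustrate. The sketch below builds a few familiar gates, and a half adder, out of a single NAND gate. Using NAND as the only building block is a choice made for this example, not something taken from Boole's work, and all function names are illustrative.

```python
def nand(a: bool, b: bool) -> bool:
    """The only primitive gate: false exactly when both inputs are true."""
    return not (a and b)


def not_(a: bool) -> bool:
    return nand(a, a)


def and_(a: bool, b: bool) -> bool:
    return not_(nand(a, b))


def or_(a: bool, b: bool) -> bool:
    return nand(not_(a), not_(b))


def xor(a: bool, b: bool) -> bool:
    # True when exactly one input is true: (a OR b) AND NOT (a AND b).
    return and_(or_(a, b), nand(a, b))


def half_adder(a: bool, b: bool):
    """Add two one-bit numbers; returns (sum bit, carry bit)."""
    return xor(a, b), and_(a, b)
```

For instance, `half_adder(True, True)` returns `(False, True)`, which is binary 10: one plus one equals two. Chaining such adders gives arithmetic on numbers of any width, which hints at how general computation reduces to wiring simple gates together.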

In 1930, the Austrian logician Kurt Gödel proved that any sufficiently powerful mathematical theory contains statements that cannot be proven within the theory, even though their falsity would make the theory inconsistent. Gödel’s incompleteness theorems have deep implications for various fields, such as pure mathematics and the debate on minds and machines.

Consider the famous halting problem:

- program a computer;
- execute the program.

Will the computer stop, or will it run forever?

Gödel showed that the capacity for self-reference automatically leads to paradoxes in logic. A few years later, in 1936, the British mathematician Alan Turing proved that there is no automatic procedure that answers this question for every program: in computers, self-reference leads to non-computability.
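The self-reference argument behind the halting problem can be sketched in a few lines. The code below is an illustration under stated assumptions, not a proof: `halts` stands for any candidate procedure that claims to predict whether a program stops, and `make_contrarian` (a name invented here) builds a program that does the opposite of whatever is predicted about it. Real programs take inputs; this sketch ignores them for brevity.

```python
def make_contrarian(halts):
    """Build a program that contradicts what `halts` predicts about it."""
    def contrarian():
        if halts(contrarian):
            while True:      # predicted to halt, so loop forever
                pass
        return "halted"      # predicted to loop, so stop immediately
    return contrarian


def how_oracle_fails(halts):
    """Describe the mistake `halts` makes on its own contrarian program."""
    contrarian = make_contrarian(halts)
    if halts(contrarian):
        # Not executed: by construction it would never return.
        return "predicted halt, but the program loops forever by construction"
    contrarian()             # safe to run: it returns at once
    return "predicted loop, but the program halted"


# Two deliberately naive candidate oracles (hypothetical).
def always_says_halts(program):
    return True


def always_says_loops(program):
    return False
```

Whatever a candidate `halts` answers, `how_oracle_fails` exhibits a program on which that answer is wrong. The two naive oracles are only the simplest cases, but the same construction applies to any candidate, however clever.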

Because computers possess both reasoning and self-reference abilities, their actions are inherently inscrutable.

###Further Information

Programming the Universe, by Seth Lloyd

Ultimate physical limits to computation, by Seth Lloyd

The Universe as Quantum Computer, by Seth Lloyd

Quantum Computing, Wikipedia.org

The Road to Reality: A Complete Guide to the Laws of the Universe, by Roger Penrose

Dance of the Photons: From Einstein to Quantum Teleportation, by Anton Zeilinger

Quantum mechanics, Wikipedia.org